Hidden variables and neural networks for complex plasma physics

Research by Archis Joglekar and Alexander Thomas published in Machine Learning: Science and Technology.

Describing the behavior of fluids often requires complex equations that need extra information to fill in the gaps about how the tiny particles in the fluid interact. For example, studying fluids with high-speed flows requires a special set of rules to account for turbulence. Likewise, when the motion of very small particles in the fluid matters, another set of rules is needed to describe how those particles move.

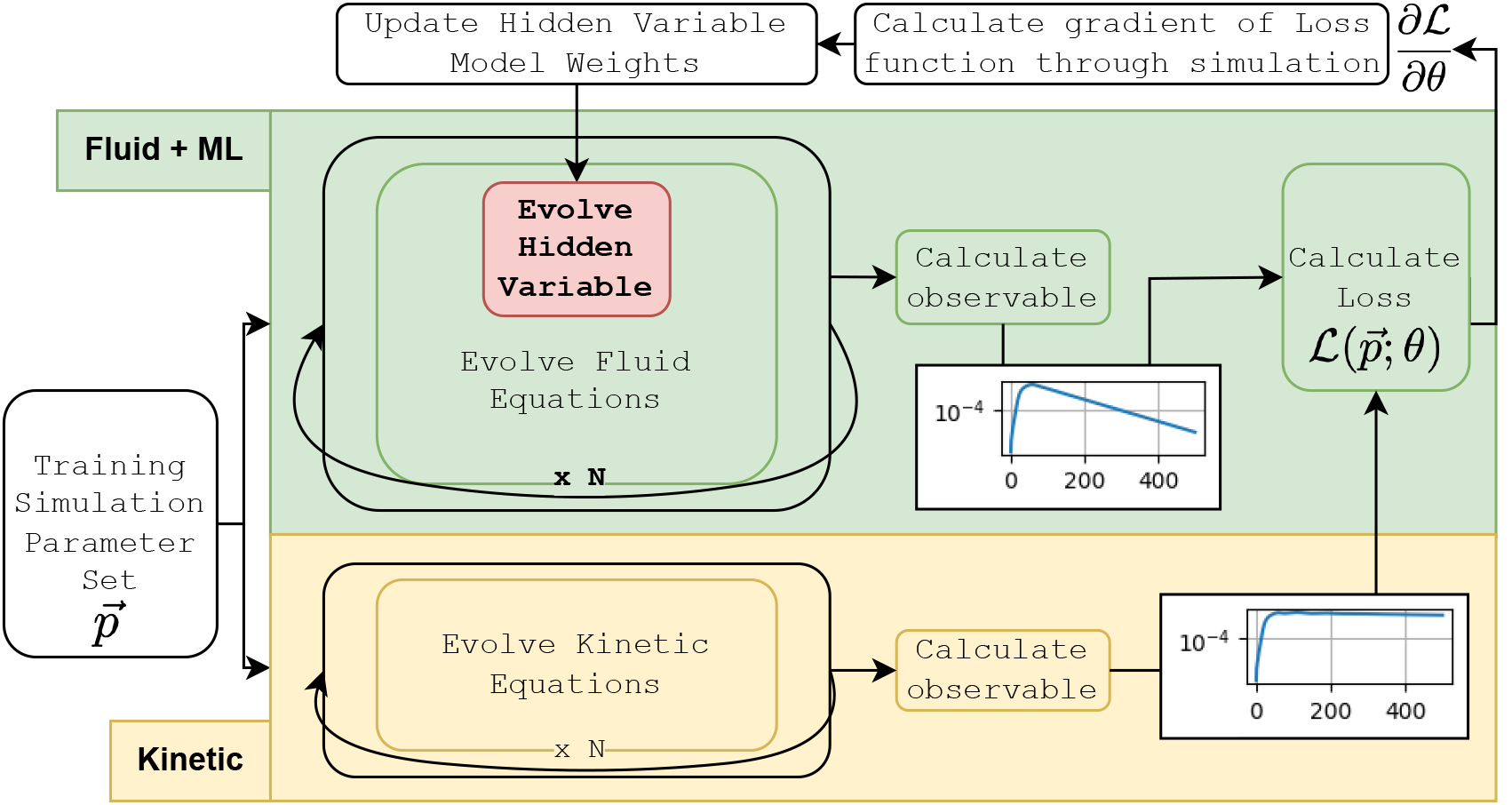

To tackle these equations, NERS adjunct assistant professor Archis Joglekar and professor Alexander Thomas used a concept known as “hidden variables.” Although these variables cannot be observed directly, they let the fluid equations capture the fine-scale details of fluid behavior. To learn the values of these hidden variables, the researchers turned to a more comprehensive model that includes those fine-scale details.

“Broadly speaking, our research leverages the recent, transformative advances in machine learning into supercharging numerical simulations and data analysis,” said Joglekar, the principal investigator on the project. “In this work, we apply machine learning to bridge the gap between fluid mechanics and the more general statistical mechanics. Statistical mechanics, in its full glory, allows more complex and intricate behavior than fluid mechanics does. By incorporating and training neural networks inside of the fluid mechanics system of equations, we enable the fluid model to reproduce these intricate phenomena that are normally reserved for statistical mechanics. This capability has only recently become possible due to the sizable advances in machine learning.”

In their research, they coupled a computer program that solves these fluid equations with neural networks, a type of machine-learning model loosely inspired by the structure and function of the human brain. The neural networks were trained on the results of more detailed simulations to predict the values of the hidden variables. This approach allowed the researchers to study complex plasma physics that is normally explored with more detailed, and therefore more computationally expensive, simulation methods.

Their work showed that, with the addition of hidden variables and neural networks, simplified equations can reproduce the behavior of fluids and small particles in complex scenarios.

Joglekar and Thomas believe that their approach, based on machine-learned hidden variables, could be useful for a wide range of problems, not just in plasma physics. One important example is laser-plasma instabilities, which generate hot electrons that can disrupt experiments aimed at achieving fusion. If researchers can build accurate models of how these hot electrons behave and how much energy they carry in fusion experiments, predictions could become much more accurate.

Their paper on the research, “Machine learning of hidden variables in multiscale fluid simulation,” was published in Machine Learning: Science and Technology.

The research was funded by the Department of Energy and the National Nuclear Security Administration.